ot.optim

Generic solvers for regularized OT or its semi-relaxed version.

Functions

- ot.optim.cg(a, b, M, reg, f, df, G0=None, line_search=None, numItermax=200, numItermaxEmd=100000, stopThr=1e-09, stopThr2=1e-09, verbose=False, log=False, nx=None, **kwargs)[source]

Solve the general regularized OT problem with conditional gradient

The function solves the following optimization problem:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg} \cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma^T \mathbf{1} &= \mathbf{b}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights (sum to 1)

The algorithm used for solving the problem is conditional gradient as discussed in [1]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, shape (nt,)) – samples in the target domain

M (array-like, shape (ns, nt)) – loss matrix

reg (float) – Regularization term >0

G0 (array-like, shape (ns,nt), optional) – initial guess (default is indep joint density)

line_search (function,) – Function to find the optimal step. Default is None and calls a wrapper to line_search_armijo.

numItermax (int, optional) – Max number of iterations

numItermaxEmd (int, optional) – Max number of iterations for emd

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

nx (backend, optional) – If let to its default value None, the backend will be deduced from other inputs.

**kwargs (dict) – Parameters for linesearch

- Returns:

gamma ((ns x nt) ndarray) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

See also

ot.lp.emdUnregularized optimal transport

ot.bregman.sinkhornEntropic regularized optimal transport

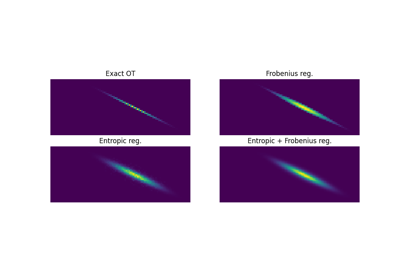

Examples using ot.optim.cg

- ot.optim.gcg(a, b, M, reg1, reg2, f, df, G0=None, numItermax=10, numInnerItermax=200, stopThr=1e-09, stopThr2=1e-09, verbose=False, log=False, **kwargs)[source]

Solve the general regularized OT problem with the generalized conditional gradient

The function solves the following optimization problem:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg_1}\cdot\Omega(\gamma) + \mathrm{reg_2}\cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma^T \mathbf{1} &= \mathbf{b}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(\Omega\) is the entropic regularization term \(\Omega(\gamma)=\sum_{i,j} \gamma_{i,j}\log(\gamma_{i,j})\)

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights (sum to 1)

The algorithm used for solving the problem is the generalized conditional gradient as discussed in [5, 7]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, (nt,)) – samples in the target domain

M (array-like, shape (ns, nt)) – loss matrix

reg1 (float) – Entropic Regularization term >0

reg2 (float) – Second Regularization term >0

G0 (array-like, shape (ns, nt), optional) – initial guess (default is indep joint density)

numItermax (int, optional) – Max number of iterations

numInnerItermax (int, optional) – Max number of iterations of Sinkhorn

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

- Returns:

gamma (ndarray, shape (ns, nt)) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

[5] N. Courty; R. Flamary; D. Tuia; A. Rakotomamonjy, “Optimal Transport for Domain Adaptation,” in IEEE Transactions on Pattern Analysis and Machine Intelligence , vol.PP, no.99, pp.1-1

[7] Rakotomamonjy, A., Flamary, R., & Courty, N. (2015). Generalized conditional gradient: analysis of convergence and applications. arXiv preprint arXiv:1510.06567.

See also

ot.optim.cgconditional gradient

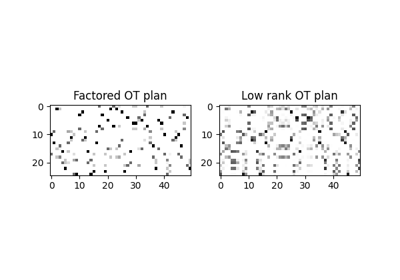

Examples using ot.optim.gcg

- ot.optim.generic_conditional_gradient(a, b, M, f, df, reg1, reg2, lp_solver, line_search, G0=None, numItermax=200, stopThr=1e-09, stopThr2=1e-09, verbose=False, log=False, nx=None, **kwargs)[source]

Solve the general regularized OT problem or its semi-relaxed version with conditional gradient or generalized conditional gradient depending on the provided linear program solver.

The function solves the following optimization problem if set as a conditional gradient:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg_1} \cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma^T \mathbf{1} &= \mathbf{b} (optional constraint)\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights (sum to 1)

The algorithm used for solving the problem is conditional gradient as discussed in [1]

The function solves the following optimization problem if set a generalized conditional gradient:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg_1}\cdot f(\gamma) + \mathrm{reg_2}\cdot\Omega(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma^T \mathbf{1} &= \mathbf{b}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\Omega\) is the entropic regularization term \(\Omega(\gamma)=\sum_{i,j} \gamma_{i,j}\log(\gamma_{i,j})\)

The algorithm used for solving the problem is the generalized conditional gradient as discussed in [5, 7]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, shape (nt,)) – samples weights in the target domain

M (array-like, shape (ns, nt)) – loss matrix

f (function) – Regularization function taking a transportation matrix as argument

df (function) – Gradient of the regularization function taking a transportation matrix as argument

reg1 (float) – Regularization term >0

reg2 (float,) – Entropic Regularization term >0. Ignored if set to None.

lp_solver (function,) –

linear program solver for direction finding of the (generalized) conditional gradient. This function must take the form lp_solver(a, b, Mi, **kwargs) with p: a and b are sample weights in both domains; Mi is the gradient of the regularized objective; optimal arguments via kwargs. It must output an admissible transport plan.

For instance, for the general regularized OT problem with conditional gradient [1]:

- def lp_solver(a, b, M, **kwargs):

return ot.emd(a, b, M)

or with the generalized conditional gradient instead [5, 7]:

- def lp_solver(a, b, Mi, **kwargs):

return ot.sinkhorn(a, b, Mi)

line_search (function,) –

Function to find the optimal step. This function must take the form line_search(cost, G, deltaG, Mi, cost_G, df_G, **kwargs) with: cost the cost function, G the transport plan, deltaG the conditional gradient direction given by lp_solver, Mi the gradient of regularized objective, cost_G the cost at G, df_G the gradient of the regularizer at G. Two types of outputs are supported:

Instances such as ot.optim.line_search_armijo (generic solver), ot.gromov.solve_gromov_linesearch (FGW problems), solve_semirelaxed_gromov_linesearch (srFGW problems) and gcg_linesearch (generalized cg), output : the line-search step alpha, the number of iterations used in the solver if applicable and the loss value at step alpha. These can be called e.g as:

Instances such as ot.gromov.solve_partial_gromov_linesearch for partial (F)GW problems add as finale output, the next step gradient reading as a convex combination of previously computed gradients, taking advantage of the regularizer quadratic form.

G0 (array-like, shape (ns,nt), optional) – initial guess (default is indep joint density)

numItermax (int, optional) – Max number of iterations

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

nx (backend, optional) – If let to its default value None, the backend will be deduced from other inputs.

**kwargs (dict) – Parameters for linesearch

- Returns:

gamma ((ns x nt) ndarray) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

[1] Ferradans, S., Papadakis, N., Peyré, G., & Aujol, J. F. (2014). Regularized discrete optimal transport. SIAM Journal on Imaging Sciences, 7(3), 1853-1882.

[5] N. Courty; R. Flamary; D. Tuia; A. Rakotomamonjy, “Optimal Transport for Domain Adaptation,” in IEEE Transactions on Pattern Analysis and Machine Intelligence , vol.PP, no.99, pp.1-1

[7] Rakotomamonjy, A., Flamary, R., & Courty, N. (2015). Generalized conditional gradient: analysis of convergence and applications. arXiv preprint arXiv:1510.06567.

See also

ot.lp.emdUnregularized optimal transport

ot.bregman.sinkhornEntropic regularized optimal transport

- ot.optim.line_search_armijo(f, xk, pk, gfk, old_fval, args=(), c1=0.0001, alpha0=0.99, alpha_min=0.0, alpha_max=None, nx=None, **kwargs)[source]

Armijo linesearch function that works with matrices

Find an approximate minimum of \(f(x_k + \alpha \cdot p_k)\) that satisfies the armijo conditions.

Note

If the loss function f returns a float (resp. a 1d array) then the returned alpha and fa are float (resp. 1d arrays).

- Parameters:

f (callable) – loss function

xk (array-like) – initial position

pk (array-like) – descent direction

gfk (array-like) – gradient of f at \(x_k\)

old_fval (float or 1d array) – loss value at \(x_k\)

args (tuple, optional) – arguments given to f

c1 (float, optional) – \(c_1\) const in armijo rule (>0)

alpha0 (float, optional) – initial step (>0)

alpha_min (float, default=0.) – minimum value for alpha

alpha_max (float, optional) – maximum value for alpha

nx (backend, optional) – If let to its default value None, a backend test will be conducted.

- Returns:

alpha (float or 1d array) – step that satisfy armijo conditions

fc (int) – nb of function call

fa (float or 1d array) – loss value at step alpha

- ot.optim.partial_cg(a, b, a_extended, b_extended, M, reg, f, df, G0=None, line_search=<function line_search_armijo>, numItermax=200, stopThr=1e-09, stopThr2=1e-09, warn=True, verbose=False, log=False, **kwargs)[source]

Solve the general regularized partial OT problem with conditional gradient

The function solves the following optimization problem:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg} \cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma \mathbf{1} &= \mathbf{b}\\ \mathbf{1}^T \gamma^T \mathbf{1} = m &\leq \min\{\|\mathbf{p}\|_1, \|\mathbf{q}\|_1\}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights

m is the amount of mass to be transported

The algorithm used for solving the problem is conditional gradient as discussed in [1]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, shape (nt,)) – currently estimated samples weights in the target domain

a_extended (array-like, shape (ns + nb_dummies,)) – samples weights in the source domain with added dummy nodes

b_extended (array-like, shape (nt + nb_dummies,)) – currently estimated samples weights in the target domain with added dummy nodes

M (array-like, shape (ns, nt)) – loss matrix

reg (float) – Regularization term >0

G0 (array-like, shape (ns,nt), optional) – initial guess (default is indep joint density)

line_search (function,) – Function to find the optimal step. Default is the armijo line-search.

numItermax (int, optional) – Max number of iterations

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

warn (bool, optional.) – Whether to raise a warning when EMD did not converge.

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

**kwargs (dict) – Parameters for linesearch

- Returns:

gamma ((ns x nt) ndarray) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

[29] Chapel, L., Alaya, M., Gasso, G. (2020). “Partial Optimal Transport with Applications on Positive-Unlabeled Learning”. NeurIPS.

- ot.optim.semirelaxed_cg(a, b, M, reg, f, df, G0=None, line_search=None, numItermax=200, stopThr=1e-09, stopThr2=1e-09, verbose=False, log=False, nx=None, **kwargs)[source]

Solve the general regularized and semi-relaxed OT problem with conditional gradient

The function solves the following optimization problem:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg} \cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights (sum to 1)

The algorithm used for solving the problem is conditional gradient as discussed in [1]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, shape (nt,)) – currently estimated samples weights in the target domain

M (array-like, shape (ns, nt)) – loss matrix

reg (float) – Regularization term >0

G0 (array-like, shape (ns,nt), optional) – initial guess (default is indep joint density)

line_search (function,) – Function to find the optimal step. Default is None and calls a wrapper to line_search_armijo.

numItermax (int, optional) – Max number of iterations

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

nx (backend, optional) – If let to its default value None, the backend will be deduced from other inputs.

**kwargs (dict) – Parameters for linesearch

- Returns:

gamma ((ns x nt) ndarray) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

[48] Cédric Vincent-Cuaz, Rémi Flamary, Marco Corneli, Titouan Vayer, Nicolas Courty. “Semi-relaxed Gromov-Wasserstein divergence and applications on graphs” International Conference on Learning Representations (ICLR), 2021.

- ot.optim.solve_1d_linesearch_quad(a, b)[source]

For any convex or non-convex 1d quadratic function f, solve the following problem:

\[\mathop{\arg \min}_{0 \leq x \leq 1} \quad f(x) = ax^{2} + bx + c\]

- ot.optim.cg(a, b, M, reg, f, df, G0=None, line_search=None, numItermax=200, numItermaxEmd=100000, stopThr=1e-09, stopThr2=1e-09, verbose=False, log=False, nx=None, **kwargs)[source]

Solve the general regularized OT problem with conditional gradient

The function solves the following optimization problem:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg} \cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma^T \mathbf{1} &= \mathbf{b}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights (sum to 1)

The algorithm used for solving the problem is conditional gradient as discussed in [1]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, shape (nt,)) – samples in the target domain

M (array-like, shape (ns, nt)) – loss matrix

reg (float) – Regularization term >0

G0 (array-like, shape (ns,nt), optional) – initial guess (default is indep joint density)

line_search (function,) – Function to find the optimal step. Default is None and calls a wrapper to line_search_armijo.

numItermax (int, optional) – Max number of iterations

numItermaxEmd (int, optional) – Max number of iterations for emd

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

nx (backend, optional) – If let to its default value None, the backend will be deduced from other inputs.

**kwargs (dict) – Parameters for linesearch

- Returns:

gamma ((ns x nt) ndarray) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

[1] Ferradans, S., Papadakis, N., Peyré, G., & Aujol, J. F. (2014). Regularized discrete optimal transport. SIAM Journal on Imaging Sciences, 7(3), 1853-1882.

See also

ot.lp.emdUnregularized optimal transport

ot.bregman.sinkhornEntropic regularized optimal transport

- ot.optim.gcg(a, b, M, reg1, reg2, f, df, G0=None, numItermax=10, numInnerItermax=200, stopThr=1e-09, stopThr2=1e-09, verbose=False, log=False, **kwargs)[source]

Solve the general regularized OT problem with the generalized conditional gradient

The function solves the following optimization problem:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg_1}\cdot\Omega(\gamma) + \mathrm{reg_2}\cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma^T \mathbf{1} &= \mathbf{b}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(\Omega\) is the entropic regularization term \(\Omega(\gamma)=\sum_{i,j} \gamma_{i,j}\log(\gamma_{i,j})\)

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights (sum to 1)

The algorithm used for solving the problem is the generalized conditional gradient as discussed in [5, 7]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, (nt,)) – samples in the target domain

M (array-like, shape (ns, nt)) – loss matrix

reg1 (float) – Entropic Regularization term >0

reg2 (float) – Second Regularization term >0

G0 (array-like, shape (ns, nt), optional) – initial guess (default is indep joint density)

numItermax (int, optional) – Max number of iterations

numInnerItermax (int, optional) – Max number of iterations of Sinkhorn

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

- Returns:

gamma (ndarray, shape (ns, nt)) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

[5] N. Courty; R. Flamary; D. Tuia; A. Rakotomamonjy, “Optimal Transport for Domain Adaptation,” in IEEE Transactions on Pattern Analysis and Machine Intelligence , vol.PP, no.99, pp.1-1

[7] Rakotomamonjy, A., Flamary, R., & Courty, N. (2015). Generalized conditional gradient: analysis of convergence and applications. arXiv preprint arXiv:1510.06567.

See also

ot.optim.cgconditional gradient

- ot.optim.generic_conditional_gradient(a, b, M, f, df, reg1, reg2, lp_solver, line_search, G0=None, numItermax=200, stopThr=1e-09, stopThr2=1e-09, verbose=False, log=False, nx=None, **kwargs)[source]

Solve the general regularized OT problem or its semi-relaxed version with conditional gradient or generalized conditional gradient depending on the provided linear program solver.

The function solves the following optimization problem if set as a conditional gradient:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg_1} \cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma^T \mathbf{1} &= \mathbf{b} (optional constraint)\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights (sum to 1)

The algorithm used for solving the problem is conditional gradient as discussed in [1]

The function solves the following optimization problem if set a generalized conditional gradient:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg_1}\cdot f(\gamma) + \mathrm{reg_2}\cdot\Omega(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma^T \mathbf{1} &= \mathbf{b}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\Omega\) is the entropic regularization term \(\Omega(\gamma)=\sum_{i,j} \gamma_{i,j}\log(\gamma_{i,j})\)

The algorithm used for solving the problem is the generalized conditional gradient as discussed in [5, 7]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, shape (nt,)) – samples weights in the target domain

M (array-like, shape (ns, nt)) – loss matrix

f (function) – Regularization function taking a transportation matrix as argument

df (function) – Gradient of the regularization function taking a transportation matrix as argument

reg1 (float) – Regularization term >0

reg2 (float,) – Entropic Regularization term >0. Ignored if set to None.

lp_solver (function,) –

linear program solver for direction finding of the (generalized) conditional gradient. This function must take the form lp_solver(a, b, Mi, **kwargs) with p: a and b are sample weights in both domains; Mi is the gradient of the regularized objective; optimal arguments via kwargs. It must output an admissible transport plan.

For instance, for the general regularized OT problem with conditional gradient [1]:

- def lp_solver(a, b, M, **kwargs):

return ot.emd(a, b, M)

or with the generalized conditional gradient instead [5, 7]:

- def lp_solver(a, b, Mi, **kwargs):

return ot.sinkhorn(a, b, Mi)

line_search (function,) –

Function to find the optimal step. This function must take the form line_search(cost, G, deltaG, Mi, cost_G, df_G, **kwargs) with: cost the cost function, G the transport plan, deltaG the conditional gradient direction given by lp_solver, Mi the gradient of regularized objective, cost_G the cost at G, df_G the gradient of the regularizer at G. Two types of outputs are supported:

Instances such as ot.optim.line_search_armijo (generic solver), ot.gromov.solve_gromov_linesearch (FGW problems), solve_semirelaxed_gromov_linesearch (srFGW problems) and gcg_linesearch (generalized cg), output : the line-search step alpha, the number of iterations used in the solver if applicable and the loss value at step alpha. These can be called e.g as:

Instances such as ot.gromov.solve_partial_gromov_linesearch for partial (F)GW problems add as finale output, the next step gradient reading as a convex combination of previously computed gradients, taking advantage of the regularizer quadratic form.

G0 (array-like, shape (ns,nt), optional) – initial guess (default is indep joint density)

numItermax (int, optional) – Max number of iterations

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

nx (backend, optional) – If let to its default value None, the backend will be deduced from other inputs.

**kwargs (dict) – Parameters for linesearch

- Returns:

gamma ((ns x nt) ndarray) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

[1] Ferradans, S., Papadakis, N., Peyré, G., & Aujol, J. F. (2014). Regularized discrete optimal transport. SIAM Journal on Imaging Sciences, 7(3), 1853-1882.

[5] N. Courty; R. Flamary; D. Tuia; A. Rakotomamonjy, “Optimal Transport for Domain Adaptation,” in IEEE Transactions on Pattern Analysis and Machine Intelligence , vol.PP, no.99, pp.1-1

[7] Rakotomamonjy, A., Flamary, R., & Courty, N. (2015). Generalized conditional gradient: analysis of convergence and applications. arXiv preprint arXiv:1510.06567.

See also

ot.lp.emdUnregularized optimal transport

ot.bregman.sinkhornEntropic regularized optimal transport

- ot.optim.line_search_armijo(f, xk, pk, gfk, old_fval, args=(), c1=0.0001, alpha0=0.99, alpha_min=0.0, alpha_max=None, nx=None, **kwargs)[source]

Armijo linesearch function that works with matrices

Find an approximate minimum of \(f(x_k + \alpha \cdot p_k)\) that satisfies the armijo conditions.

Note

If the loss function f returns a float (resp. a 1d array) then the returned alpha and fa are float (resp. 1d arrays).

- Parameters:

f (callable) – loss function

xk (array-like) – initial position

pk (array-like) – descent direction

gfk (array-like) – gradient of f at \(x_k\)

old_fval (float or 1d array) – loss value at \(x_k\)

args (tuple, optional) – arguments given to f

c1 (float, optional) – \(c_1\) const in armijo rule (>0)

alpha0 (float, optional) – initial step (>0)

alpha_min (float, default=0.) – minimum value for alpha

alpha_max (float, optional) – maximum value for alpha

nx (backend, optional) – If let to its default value None, a backend test will be conducted.

- Returns:

alpha (float or 1d array) – step that satisfy armijo conditions

fc (int) – nb of function call

fa (float or 1d array) – loss value at step alpha

- ot.optim.partial_cg(a, b, a_extended, b_extended, M, reg, f, df, G0=None, line_search=<function line_search_armijo>, numItermax=200, stopThr=1e-09, stopThr2=1e-09, warn=True, verbose=False, log=False, **kwargs)[source]

Solve the general regularized partial OT problem with conditional gradient

The function solves the following optimization problem:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg} \cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma \mathbf{1} &= \mathbf{b}\\ \mathbf{1}^T \gamma^T \mathbf{1} = m &\leq \min\{\|\mathbf{p}\|_1, \|\mathbf{q}\|_1\}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights

m is the amount of mass to be transported

The algorithm used for solving the problem is conditional gradient as discussed in [1]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, shape (nt,)) – currently estimated samples weights in the target domain

a_extended (array-like, shape (ns + nb_dummies,)) – samples weights in the source domain with added dummy nodes

b_extended (array-like, shape (nt + nb_dummies,)) – currently estimated samples weights in the target domain with added dummy nodes

M (array-like, shape (ns, nt)) – loss matrix

reg (float) – Regularization term >0

G0 (array-like, shape (ns,nt), optional) – initial guess (default is indep joint density)

line_search (function,) – Function to find the optimal step. Default is the armijo line-search.

numItermax (int, optional) – Max number of iterations

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

warn (bool, optional.) – Whether to raise a warning when EMD did not converge.

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

**kwargs (dict) – Parameters for linesearch

- Returns:

gamma ((ns x nt) ndarray) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

[29] Chapel, L., Alaya, M., Gasso, G. (2020). “Partial Optimal Transport with Applications on Positive-Unlabeled Learning”. NeurIPS.

- ot.optim.semirelaxed_cg(a, b, M, reg, f, df, G0=None, line_search=None, numItermax=200, stopThr=1e-09, stopThr2=1e-09, verbose=False, log=False, nx=None, **kwargs)[source]

Solve the general regularized and semi-relaxed OT problem with conditional gradient

The function solves the following optimization problem:

\[ \begin{align}\begin{aligned}\gamma = \mathop{\arg \min}_\gamma \quad \langle \gamma, \mathbf{M} \rangle_F + \mathrm{reg} \cdot f(\gamma)\\s.t. \ \gamma \mathbf{1} &= \mathbf{a}\\ \gamma &\geq 0\end{aligned}\end{align} \]where :

\(\mathbf{M}\) is the (ns, nt) metric cost matrix

\(f\) is the regularization term (and df is its gradient)

\(\mathbf{a}\) and \(\mathbf{b}\) are source and target weights (sum to 1)

The algorithm used for solving the problem is conditional gradient as discussed in [1]

- Parameters:

a (array-like, shape (ns,)) – samples weights in the source domain

b (array-like, shape (nt,)) – currently estimated samples weights in the target domain

M (array-like, shape (ns, nt)) – loss matrix

reg (float) – Regularization term >0

G0 (array-like, shape (ns,nt), optional) – initial guess (default is indep joint density)

line_search (function,) – Function to find the optimal step. Default is None and calls a wrapper to line_search_armijo.

numItermax (int, optional) – Max number of iterations

stopThr (float, optional) – Stop threshold on the relative variation (>0)

stopThr2 (float, optional) – Stop threshold on the absolute variation (>0)

verbose (bool, optional) – Print information along iterations

log (bool, optional) – record log if True

nx (backend, optional) – If let to its default value None, the backend will be deduced from other inputs.

**kwargs (dict) – Parameters for linesearch

- Returns:

gamma ((ns x nt) ndarray) – Optimal transportation matrix for the given parameters

log (dict) – log dictionary return only if log==True in parameters

References

[48] Cédric Vincent-Cuaz, Rémi Flamary, Marco Corneli, Titouan Vayer, Nicolas Courty. “Semi-relaxed Gromov-Wasserstein divergence and applications on graphs” International Conference on Learning Representations (ICLR), 2021.