
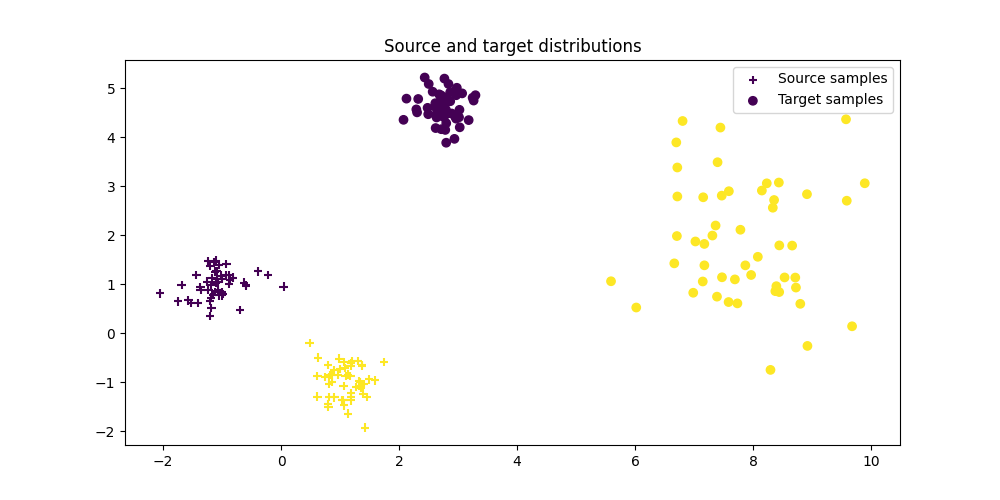

OT mapping estimation for domain adaptation

Note: this example was added in release 0.1.9.

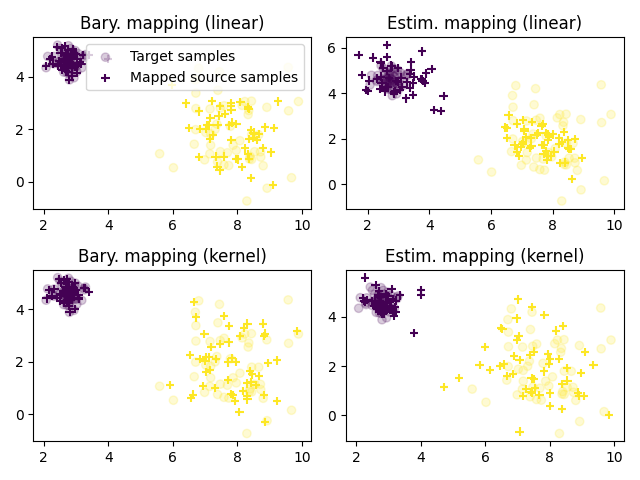

This example shows how to use MappingTransport to jointly estimate the optimal transport coupling and an approximation of the transport map, using either a linear or a kernelized (here Gaussian) mapping, as introduced in [8].

[8] M. Perrot, N. Courty, R. Flamary, A. Habrard, “Mapping estimation for discrete optimal transport”, Neural Information Processing Systems (NIPS), 2016.
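For the linear mapping, the joint problem from [8] can be summarized roughly as follows. This is our reading of the POT documentation for the linear case, so treat the exact scaling as an assumption. Here $\gamma$ is the coupling, $\mathbf{M}$ the pairwise squared Euclidean cost between source and target samples, $L$ the linear (affine when `bias=True`) map, and $\mu$, $\eta$ correspond to the `mu` and `eta` constructor arguments used below.

```latex
\min_{\gamma,\, L}\;
  \big\| L(\mathbf{X}_s) - n_s\, \gamma\, \mathbf{X}_t \big\|_F^2
  + \mu \,\langle \gamma, \mathbf{M} \rangle_F
  + \eta \,\| L - \mathbf{I} \|_F^2
\quad \text{s.t.}\quad
  \gamma \mathbf{1}_{n_t} = \tfrac{1}{n_s}\mathbf{1}_{n_s},\;\;
  \gamma^\top \mathbf{1}_{n_s} = \tfrac{1}{n_t}\mathbf{1}_{n_t},\;\;
  \gamma \ge 0
```

Because each row of $\gamma$ sums to $1/n_s$, the rows of $n_s \gamma \mathbf{X}_t$ are the barycentric images of the source points, so the first term asks $L$ to reproduce the barycentric mapping while $\eta$ keeps $L$ close to the identity.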

# Authors: Remi Flamary <remi.flamary@unice.fr>
#          Stanislas Chambon <stan.chambon@gmail.com>
#
# License: MIT License

# sphinx_gallery_thumbnail_number = 2

import numpy as np
import matplotlib.pylab as pl
import ot

Generate data

n_source_samples = 100
n_target_samples = 100
theta = 2 * np.pi / 20
noise_level = 0.1

Xs, ys = ot.datasets.make_data_classif("gaussrot", n_source_samples, nz=noise_level)
Xs_new, _ = ot.datasets.make_data_classif("gaussrot", n_source_samples, nz=noise_level)
Xt, yt = ot.datasets.make_data_classif(
    "gaussrot", n_target_samples, theta=theta, nz=noise_level
)

# one of the target modes changes its variance (no linear mapping)
Xt[yt == 2] *= 3
Xt = Xt + 4
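The claim that rescaling a single target mode leaves "no linear mapping" can be checked on toy data. The sketch below is hypothetical: it builds its own three-blob data with known point correspondences (which OT normally has to estimate) instead of calling `ot.datasets`, and fits an affine map by least squares. A rotation plus translation is fitted exactly, while scaling only one class is not.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy source: three 2-D Gaussian blobs with known labels
# (a stand-in for the "gaussrot" data, not a reproduction of it).
centers = np.array([[0.0, 1.0], [1.0, 0.0], [-1.0, -1.0]])
y = np.repeat([0, 1, 2], 50)
X = centers[y] + 0.1 * rng.standard_normal((150, 2))

# A rotation followed by a translation is an affine map.
theta = 2 * np.pi / 20
R = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
Y_affine = X @ R.T + 4

# Scaling only one class (as in Xt[yt == 2] *= 3) breaks affinity.
Y_scaled = X @ R.T
Y_scaled[y == 2] *= 3
Y_scaled = Y_scaled + 4


def affine_fit_residual(X, Y):
    """Mean squared residual of the least-squares fit Y ~ [X, 1] @ A."""
    X1 = np.hstack([X, np.ones((X.shape[0], 1))])
    A, *_ = np.linalg.lstsq(X1, Y, rcond=None)
    return np.mean((X1 @ A - Y) ** 2)


print(affine_fit_residual(X, Y_affine))  # essentially zero
print(affine_fit_residual(X, Y_scaled))  # clearly positive
```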

Plot data

Figure 1: Source and target distributions.
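The code that draws this figure is not included in this section. A minimal sketch that produces a figure with the same title follows; the helper name `plot_source_target` and the random stand-in data are hypothetical, and in the example you would pass `Xs, ys, Xt, yt` from above instead.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless
import matplotlib.pyplot as plt
import numpy as np


def plot_source_target(Xs, ys, Xt, yt):
    """Scatter source (+) and target (o) samples, colored by class label."""
    fig = plt.figure(1, (10, 5))
    fig.clf()
    ax = fig.add_subplot(1, 1, 1)
    ax.scatter(Xs[:, 0], Xs[:, 1], c=ys, marker="+", label="Source samples")
    ax.scatter(Xt[:, 0], Xt[:, 1], c=yt, marker="o", label="Target samples")
    ax.legend(loc=0)
    ax.set_title("Source and target distributions")
    return fig, ax


# Hypothetical stand-in data with labels 1 and 2.
rng = np.random.default_rng(0)
Xs_demo = rng.standard_normal((100, 2))
ys_demo = rng.integers(1, 3, size=100)
Xt_demo = 3 * rng.standard_normal((100, 2)) + 4
yt_demo = rng.integers(1, 3, size=100)

fig, ax = plot_source_target(Xs_demo, ys_demo, Xt_demo, yt_demo)
```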

Instantiate the different transport algorithms and fit them

# MappingTransport with linear kernel
ot_mapping_linear = ot.da.MappingTransport(
    kernel="linear", mu=1e0, eta=1e-8, bias=True, max_iter=20, verbose=True
)
ot_mapping_linear.fit(Xs=Xs, Xt=Xt)

# for the source samples used in fit, transform applies the barycentric mapping
transp_Xs_linear = ot_mapping_linear.transform(Xs=Xs)

# for new (out-of-sample) source samples, transform applies the learned linear mapping
transp_Xs_linear_new = ot_mapping_linear.transform(Xs=Xs_new)
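The two comments above distinguish the barycentric mapping, used for the samples the model was fitted on, from the learned linear map, used for new samples. A minimal numpy sketch of both follows. The helper names are hypothetical, and the closed-form ridge step (shrinking the map toward the identity with weight `eta`) is our simplified reading of the linear mapping step in [8], not POT's code; the actual solver alternates a step like this with coupling updates.

```python
import numpy as np


def barycentric_mapping(G, Xt):
    """Send each source point to the G-weighted average of the target points."""
    return (G / G.sum(axis=1, keepdims=True)) @ Xt


def fit_linear_map(Xs, Y, eta, bias=True):
    """Solve min_L ||X1 @ L - Y||_F^2 + eta * ||L - I0||_F^2 in closed form.

    X1 is Xs with an optional column of ones; I0 is the identity with an extra
    zero row for the bias (so the bias is also shrunk, toward zero).
    """
    n, d = Xs.shape
    X1 = np.hstack([Xs, np.ones((n, 1))]) if bias else Xs
    I0 = np.vstack([np.eye(d), np.zeros((1, d))]) if bias else np.eye(d)
    A = X1.T @ X1 + eta * np.eye(X1.shape[1])
    return np.linalg.solve(A, X1.T @ Y + eta * I0)


def apply_linear_map(L, X, bias=True):
    """Apply a map learned by fit_linear_map to (possibly new) points X."""
    X1 = np.hstack([X, np.ones((X.shape[0], 1))]) if bias else X
    return X1 @ L


rng = np.random.default_rng(0)
Xs_toy = rng.standard_normal((20, 2))
M = np.array([[0.8, -0.6], [0.6, 0.8]])  # hypothetical linear part
b = np.array([4.0, 4.0])  # hypothetical offset
Xt_toy = Xs_toy @ M + b

# Uniform one-to-one coupling (identity pairing), rows summing to 1/n_s.
G = np.eye(20) / 20
Y_bary = barycentric_mapping(G, Xt_toy)  # equals Xt_toy for this coupling

L = fit_linear_map(Xs_toy, Y_bary, eta=1e-8)
Xs_toy_new = rng.standard_normal((5, 2))
print(np.abs(apply_linear_map(L, Xs_toy_new) - (Xs_toy_new @ M + b)).max())
```

With a coupling that splits mass, the barycentric image is a weighted average: a source row with weights (0.25, 0.25) over targets (0, 0) and (2, 2) maps to (1, 1).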

# MappingTransport with gaussian kernel
ot_mapping_gaussian = ot.da.MappingTransport(
    kernel="gaussian", eta=1e-5, mu=1e-1, bias=True, sigma=1, max_iter=10, verbose=True
)
ot_mapping_gaussian.fit(Xs=Xs, Xt=Xt)

# for the source samples used in fit, transform applies the barycentric mapping
transp_Xs_gaussian = ot_mapping_gaussian.transform(Xs=Xs)

# for new (out-of-sample) source samples, transform applies the learned gaussian mapping
transp_Xs_gaussian_new = ot_mapping_gaussian.transform(Xs=Xs_new)

It.  |Loss        |Delta loss
--------------------------------
    0|4.190105e+03|0.000000e+00
    1|4.170411e+03|-4.700201e-03
    2|4.169845e+03|-1.356805e-04
    3|4.169664e+03|-4.344581e-05
    4|4.169558e+03|-2.549048e-05
    5|4.169490e+03|-1.619901e-05
    6|4.169453e+03|-8.982881e-06

It.  |Loss        |Delta loss
--------------------------------
    0|4.207356e+02|0.000000e+00
    1|4.153604e+02|-1.277552e-02
    2|4.150590e+02|-7.257432e-04
    3|4.149197e+02|-3.356453e-04
    4|4.148198e+02|-2.408251e-04
    5|4.147508e+02|-1.661834e-04
    6|4.147001e+02|-1.223502e-04
    7|4.146607e+02|-9.506358e-05
    8|4.146269e+02|-8.141766e-05
    9|4.145989e+02|-6.750100e-05
   10|4.145770e+02|-5.283163e-05
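The "Delta loss" column is consistent with the relative change of the loss between consecutive iterations: recomputing it from the printed (rounded) losses agrees to within a few 1e-7. Assuming a stopping tolerance of 1e-5 on that relative change (our assumption about `MappingTransport`'s default `tol`), this would account for the linear run stopping after 6 iterations despite `max_iter=20`, while the Gaussian run used all `max_iter=10` iterations. The helper names below are hypothetical.

```python
# Loss values copied from the two verbose logs above.
linear_loss = [4.190105e03, 4.170411e03, 4.169845e03, 4.169664e03,
               4.169558e03, 4.169490e03, 4.169453e03]
gaussian_loss = [4.207356e02, 4.153604e02, 4.150590e02, 4.149197e02,
                 4.148198e02, 4.147508e02, 4.147001e02, 4.146607e02,
                 4.146269e02, 4.145989e02, 4.145770e02]


def relative_deltas(losses):
    """Relative change (loss[k] - loss[k-1]) / loss[k-1] for k = 1, 2, ..."""
    return [(cur - prev) / prev for prev, cur in zip(losses, losses[1:])]


def first_converged_iteration(losses, tol):
    """First k with |relative delta| < tol, or None if that never happens.

    Hypothetical stopping rule; tol=1e-5 below is an assumed default.
    """
    for k, delta in enumerate(relative_deltas(losses), start=1):
        if abs(delta) < tol:
            return k
    return None


print(first_converged_iteration(linear_loss, tol=1e-5))    # 6: stops early
print(first_converged_iteration(gaussian_loss, tol=1e-5))  # None: hits max_iter
```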

Plot transported samples

pl.figure(2)
pl.clf()

pl.subplot(2, 2, 1)
pl.scatter(Xt[:, 0], Xt[:, 1], c=yt, marker="o", label="Target samples", alpha=0.2)
pl.scatter(
    transp_Xs_linear[:, 0],
    transp_Xs_linear[:, 1],
    c=ys,
    marker="+",
    label="Mapped source samples",
)
pl.title("Bary. mapping (linear)")
pl.legend(loc=0)

pl.subplot(2, 2, 2)
pl.scatter(Xt[:, 0], Xt[:, 1], c=yt, marker="o", label="Target samples", alpha=0.2)
pl.scatter(
    transp_Xs_linear_new[:, 0],
    transp_Xs_linear_new[:, 1],
    c=ys,
    marker="+",
    label="Learned mapping",
)
pl.title("Estim. mapping (linear)")

pl.subplot(2, 2, 3)
pl.scatter(Xt[:, 0], Xt[:, 1], c=yt, marker="o", label="Target samples", alpha=0.2)
pl.scatter(
    transp_Xs_gaussian[:, 0],
    transp_Xs_gaussian[:, 1],
    c=ys,
    marker="+",
    label="barycentric mapping",
)
pl.title("Bary. mapping (kernel)")

pl.subplot(2, 2, 4)
pl.scatter(Xt[:, 0], Xt[:, 1], c=yt, marker="o", label="Target samples", alpha=0.2)
pl.scatter(
    transp_Xs_gaussian_new[:, 0],
    transp_Xs_gaussian_new[:, 1],
    c=ys,
    marker="+",
    label="Learned mapping",
)
pl.title("Estim. mapping (kernel)")

pl.tight_layout()
pl.show()
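In the kernel panels, new source samples are mapped by a kernel expansion over the training source points rather than by a matrix product with a single linear operator. The sketch below is a hypothetical simplification: it fits plain kernel ridge regression onto fixed targets (no coupling update, no `mu` term, no bias column) and assumes the Gaussian kernel exp(-||x - y||^2 / (2 sigma^2)). The helper names are illustrative, not POT API. The toy target scales one region by 3 before shifting, mimicking the non-affine change in the target data.

```python
import numpy as np


def gaussian_kernel(X1, X2, sigma=1.0):
    """K[i, j] = exp(-||X1[i] - X2[j]||^2 / (2 * sigma**2))."""
    sq = (X1**2).sum(1)[:, None] + (X2**2).sum(1)[None, :] - 2 * X1 @ X2.T
    return np.exp(-np.maximum(sq, 0.0) / (2 * sigma**2))


def fit_kernel_map(Xs, Y, sigma=1.0, eta=1e-5):
    """Kernel ridge coefficients Phi such that Y is approximated by K(Xs, Xs) @ Phi."""
    K = gaussian_kernel(Xs, Xs, sigma)
    return np.linalg.solve(K + eta * np.eye(len(Xs)), Y)


def apply_kernel_map(Phi, Xs_train, X, sigma=1.0):
    """Out-of-sample mapping: kernel between new and training points, times Phi."""
    return gaussian_kernel(X, Xs_train, sigma) @ Phi


# Hypothetical training inputs on a 5 x 5 grid and a non-affine target:
# points with a positive first coordinate are scaled by 3, then all are shifted.
g = np.linspace(-3.0, 3.0, 5)
Xg = np.array([[a, b] for a in g for b in g])
Y = np.where(Xg[:, [0]] > 0, 3 * Xg, Xg) + 4

Phi = fit_kernel_map(Xg, Y, sigma=1.0, eta=1e-5)
Y_fit = apply_kernel_map(Phi, Xg, Xg, sigma=1.0)  # close to Y on the grid
X_new = np.array([[0.5, 0.5], [-2.0, 1.0]])
Y_new = apply_kernel_map(Phi, Xg, X_new, sigma=1.0)  # smooth interpolation
```

A small `eta` lets the map nearly interpolate the training targets; larger values trade fit for smoothness, which is the role the regularization plays in the example's `eta=1e-5` setting.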
